I have been looking through the Obama administration's midsession review, which was released a few days ago. I found the comparison between the administration's economic forecast and the Blue Chip consensus of private forecasters noteworthy. (See the table called "Table 3. Comparison of Economic Assumptions.") Here is the projected growth of real GDP, in percent, fourth quarter to fourth quarter: Team Obama's forecast first, then the private forecasters'.

Year   Administration   Blue Chip
2012   2.6              2.0
2013   2.6              2.5
2014   4.0              3.1
2015   4.2              3.0
2016   3.9              2.9

Of course, the Administration's optimistic forecast feeds directly into its budget projection. If we get the slower growth that private forecasters predict, we will get less tax revenue and larger budget deficits than the Administration projects.
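Gaps of a point or so per year compound. As a rough sketch, the snippet below chains the year-by-year figures from the table into a cumulative increase in the level of real GDP from the end of 2011 to the end of 2016 under each forecast. It treats each Q4-to-Q4 rate as exact and ignores revisions; the function and variable names are illustrative only.

```python
# Projected real GDP growth, percent, Q4 to Q4 (from the table above).
ADMINISTRATION = {2012: 2.6, 2013: 2.6, 2014: 4.0, 2015: 4.2, 2016: 3.9}
BLUE_CHIP = {2012: 2.0, 2013: 2.5, 2014: 3.1, 2015: 3.0, 2016: 2.9}


def cumulative_growth(rates_pct):
    """Chain annual growth rates (percent) into a cumulative level ratio."""
    level = 1.0
    for year in sorted(rates_pct):
        level *= 1.0 + rates_pct[year] / 100.0
    return level


admin_level = cumulative_growth(ADMINISTRATION)
consensus_level = cumulative_growth(BLUE_CHIP)
# Percent by which the administration's projected GDP level exceeds the consensus.
gap_pct = (admin_level / consensus_level - 1.0) * 100.0

print(f"Administration: +{(admin_level - 1) * 100:.1f}% over 2012-2016")
print(f"Blue Chip:      +{(consensus_level - 1) * 100:.1f}% over 2012-2016")
print(f"Level gap by Q4 2016: {gap_pct:.1f}%")
```

On these figures, cumulative growth is roughly 18.5 percent versus 14.2 percent, so by the end of 2016 the administration is projecting a level of real GDP a bit under 4 percent higher than the private consensus.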

Tuesday, July 31, 2012

Friday, July 27, 2012

Thursday, July 26, 2012

Treasury/MBS Swaps

Arvind Krishnamurthy and Annette Vissing-Jorgensen have written this note, which makes an argument that a useful quantitative easing (QE) operation by the Fed would be a swap of Treasury bonds for mortgage-backed securities (MBS). This summarizes the argument:

We make two main points:

1. Purchasing long MBS brings down long MBS yields by more than would an equal sized purchase of long Treasury bonds and thus is likely to create a larger stimulus to economic activity via a larger reduction in homeowner borrowing costs.

2. Purchasing Treasury bonds brings down Treasury yields, but part of this decrease indicates a welfare cost rather than a benefit to the economy. Thus it would be better to sell rather than purchase long-term Treasury bonds.

Let's start with point #2 above. On page 3, Krishnamurthy and Vissing-Jorgensen state:

...by purchasing long-term Treasury bonds the Fed shrinks the supply of extremely safe assets, which drives up a scarcity price-premium on such assets and lowers their yields. This latter channel is a welfare cost to the economy.

I like that. It's exactly how an open market operation works in this paper. A swap of outside money for government debt, under the right conditions, makes liquidity more scarce in financial markets and increases its price: the real interest rate goes down. The price of government debt reflects a liquidity premium. But this kind of swap is not a good thing, as it makes financial trade less efficient.

The problem is that it doesn't work that way in a liquidity trap (positive excess reserves and the interest rate on reserves driving all short-term interest rates). For example, under current circumstances, if the Fed swaps reserves for Treasury bonds, that implies no change in financial market liquidity, as the private sector's ability to intermediate T-bonds implies that the Fed's swap just nets out.

Now, move to point #1. The claim here is that we would prefer that the Fed have a portfolio of MBS rather than Treasury bonds. But what is the Fed supposed to be accomplishing by purchasing MBS? The case that some people make to justify the existence of Fannie Mae and Freddie Mac (currently under government "conservatorship") is that they are a boon to the mortgage market. Otherwise illiquid mortgages are sold to Fannie Mae and Freddie Mac. These mortgages can be held on the balance sheets of the GSEs, financed by liabilities which are perfect substitutes for government debt. They can be, and are, packaged as MBS, which are tradeable, liquid securities. Why is it necessary that we go through another step and have these MBS intermediated by the Fed? They're not liquid enough already?

Maybe there is some risk associated with MBS (prepayment and default risk) that we don't see in Treasury bonds, that we are transferring from the private sector to the Fed with a Fed MBS purchase. But how does that work? The risk has to be borne by someone, and if the Fed holds the MBS, taxpayers bear the risk. Why is the Treasury any better at allocating risk in the private sector than are private financial institutions?

Likely Krishnamurthy and Vissing-Jorgensen's ideas will be used to support what the FOMC seems intent on doing, which is executing another QE operation, this time with MBS (as with QE1).

What I've been reading

I am down here on Long Beach Island, NJ, visiting my mom with my younger son. It has given me a chance to catch up on some reading, and I have a recommendation to pass along: Unintended Consequences by Edward Conard. The subtitle (Why everything you've been told about the economy is wrong) is unnecessarily contentious and not really an accurate description. But the book, written by a former Bain partner, gives a good overview of the forces behind the financial crisis. It is far smarter and more thought-provoking than most economics written for the general public.

You can read the beginning of the book by clicking here. Be sure to check out Figure 1-6 on page 22, which I found quite illuminating.

Wednesday, July 25, 2012

Structure, Lucas Critique critiquing, etc.

There has been some blog conversation about microfoundations, the Lucas critique, etc., recently, so I thought I would add my two cents. Noah Smith wonders how you are supposed to tell when your model satisfies the Lucas critique, and when it doesn't. Good question. Here's a quote from an economist who has been a strong supporter of the Lucas Critique:

The sentence “My model is structural” as now used seems to me to be equivalent to “My model is good” or “My model is better than your model.”

We're trying to construct models that are useful for analyzing the effects of policies. But if that's our intention, then those models should give us the right answers. What's structural? That depends on the model, and the policy, and we know that the model can't be perfect. Our ability to estimate - or calibrate - parameters is limited, and we have to leave some things out, otherwise we have something that is too complicated to understand. So we have to accept that any economic model is going to be imperfect in its predictions about policy. This is not going to be like using physics to put people on the moon.

But what's the big deal? Noah seems endlessly perturbed that economics is not like the natural sciences. There are no litmus tests that allow us to throw out bad theories so we can be done with them. But that makes economics fun. We have to be creative about using the available empirical evidence to reinforce our arguments. We have to be much more creative on the theoretical side than is the case in the natural sciences. We get to have interesting fights in public. Who could ask for more?

Here's another issue. Noah seems to think that the Lucas critique is a convenient bludgeon, designed for use by the right wing:

This is just one more judgment call in macro. Which means one more place where personal and political bias can creep in. In the comments on my earlier post, someone wrote: "I think of the Lucas Critique as a gun that only fires left." What that means is that in practice, the Lucas Critique is generally brought up as an objection to models in which the central bank can stabilize output. Or in other words, consensus has a well-known hard-money bias.

On the upside, this is certainly milder than some things I hear. Some people out there think that the entire post-1970 research program in macroeconomics is a right-wing bludgeon. As I point out in this post, the Lucas critique was as much a problem for the money demand function as for the Phillips curve, and the quantity theorists certainly were (are) not lefties. As well, I'm sure that Paul Krugman would be all in favor of structuralism if there existed a watertight theory of nominal wage and price rigidity. Then we would be spared stuff like this:

But what if you have an observed fact about the world — say, downward wage rigidity — that you can’t easily derive from first principles, but seems to be robust in practice? You might think that the right response is to operate on the provisional assumption that this relationship will continue to hold, rather than simply assume it away because it isn’t properly microfounded — and you’d be right, in my view.

Krugman understands that if he said that at a serious economics conference, or wrote it in a paper submitted to a serious economics journal, he would probably be given a hard time, and that would be right, in my view. This is part of what we do to sift ideas. The theory has to be convincing.

But the perception that Lucas critique arguments are more often used against arguments favoring government intervention than against those which do not is probably correct. Economic arguments that justify no government intervention are often easy to construct and understand. Justifying intervention is hard. Try to actually define what an externality is, or to construct a Keynesian model with all the proper working parts. Those things are difficult.

QE3 Talk

There is plenty of talk, coming from Fed officials and the mainstream media (the NYT and WSJ, for example), that the Fed is thinking about more QE and will announce it either after its meeting next week or in September.

Rightly or wrongly, the Fed has made clear how it interprets the dual mandate from Congress. The first part of the mandate, "price stability," translates to 2% inflation. The second part, "maximum employment," means concern with the labor market more generally, and often with the unemployment rate in particular. How are we doing? On the first part of the mandate, we're south of the target, as you can see in the next chart. The chart shows the inflation rate, measured as the 12-month percentage increase in the PCE deflator and in the core PCE deflator. The Fed has made clear that its interest is in the headline PCE inflation measure. In any case, both measures are below 2%, though the core measure is not far below. On the second part of the mandate, the recent surprises have been on the downside: employment growth is lower than expected, and the unemployment rate is not falling as expected. Nothing is settled in Europe, and fiscal policy in the United States is currently confused and confusing.
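For readers who want the Taylor-rule logic spelled out, here is a minimal sketch of the rule in the form Taylor proposed in 1993: a 2 percent equilibrium real rate, a 2 percent inflation target, and weights of 0.5 on the inflation gap and the output gap. The coefficients, the zero floor, and the inputs below are illustrative assumptions, not the FOMC's own specification or actual 2012 data. The point is only directional: inflation below target and a negative output gap both push the prescribed rate down, and once the prescription falls below the floor, the policy rate alone cannot deliver it.

```python
def taylor_rate(inflation, output_gap, r_star=2.0, pi_target=2.0,
                w_inflation=0.5, w_gap=0.5):
    """Prescribed nominal policy rate, in percent (Taylor 1993 form).

    inflation:  recent inflation rate, percent
    output_gap: percent deviation of real GDP from potential
    """
    return (r_star + inflation
            + w_inflation * (inflation - pi_target)
            + w_gap * output_gap)


def shortfall_at_floor(prescribed, floor=0.0):
    """How far the prescription lies below the lowest feasible policy rate."""
    return max(floor - prescribed, 0.0)


# Hypothetical inputs, chosen only to illustrate the mechanics.
on_target = taylor_rate(inflation=2.0, output_gap=0.0)
# A gap weight of 1.0, as in some later variants of the rule.
weak = taylor_rate(inflation=1.5, output_gap=-5.0, w_gap=1.0)
print(on_target, weak, shortfall_at_floor(weak))  # 4.0 -1.75 1.75
```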

Any Taylor-rule central banker would be pushing for more monetary accommodation. But what does the Fed have available that can allow it to be more accommodating? Other than QE, here are some other possibilities:

1. Reduce the interest rate on reserves (IROR) to zero: The FOMC would announce this as a reduction in the fed funds rate target to 0%, instead of a target range of 0-0.25%, but the Board of Governors would actually have to reduce the IROR to make a difference, as it's the IROR that drives the fed funds rate under current conditions. But the Fed will not reduce the IROR to zero. Its concern is with money market mutual funds (MMMFs). With IROR at 0.25%, there seems to be enough of an interest rate differential that MMMFs can eke out a profit and retain their contracts, which fix the value of an MMMF share at $1. The concern is that, if the IROR goes to zero, then there would be an exodus from MMMFs, as shareholders would fear that these MMMFs were about to "break the buck." MMMFs intermediate a lot of commercial paper, for example, so the resulting disintermediation would be "disruptive." I'm not sure that these concerns make any sense, but I'll write about that another time.

2. More forward guidance: Here's how this reads in the last FOMC statement, from June 20:

the Committee decided today to keep the target range for the federal funds rate at 0 to 1/4 percent and currently anticipates that economic conditions--including low rates of resource utilization and a subdued outlook for inflation over the medium run--are likely to warrant exceptionally low levels for the federal funds rate at least through late 2014.

Early forward guidance language (e.g., in 2009) was more vague, mentioning only an "extended period" of low interest rates. The extended period became an explicitly specified period, and that period was lengthened to extend to late 2014. The idea behind forward guidance is that expectations about future monetary policy are important for determining the current inflation rate, for example. So monetary policy can affect inflation and perhaps real activity without the Fed actually taking any current action. All it needs to do is make commitments about future policy. Thus, the Fed could in principle be more accommodative by changing the late 2014 date to something else - maybe late 2015. The problem here is that the Fed has made clear that the "late 2014" language is something it is willing to change at will. If future events made tightening look desirable before the end of 2014 arrived, the Fed would be willing to move the date up, apparently. As a result the forward guidance language no longer has any meaning. It's not a commitment, so it's irrelevant.

So that leaves us with QE. If you have been reading these posts, you know I think QE does not matter, under current conditions. But suppose you think it does. Why would the Fed think it needs to accommodate more? It already did so at the last meeting in June, when it extended "Operation Twist" to the end of the year. Implicitly, there seems to be a view that "Operation Twist" is a milder version of QE, maybe because it does not change the size of the Fed's balance sheet. But Operation Twist and QE are actually the same thing. What matters (if you buy into QE at all) is the composition of the outstanding debt of the Fed and the Treasury, and Operation Twist and QE change that composition in essentially the same way. Operation Twist is a swap of short-maturity government debt for long-maturity debt, and QE is a swap of reserves for long-maturity government debt (or mortgage-backed securities; more about that shortly). Is there any difference between short-term government debt and reserves currently? No, not much. So Operation Twist and QE are the same thing.
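The equivalence claim is essentially accounting, so it can be checked mechanically. Below is a minimal sketch under the post's own premise that reserves and short-term Treasury debt are close to perfect substitutes right now. It tracks the private sector's holdings of consolidated Fed-plus-Treasury liabilities, applies a hypothetical $100 billion Twist and an equal-sized QE, and compares the resulting maturity mix. The starting holdings and the class and function names are made up for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PublicHoldings:
    """Private-sector holdings of Fed and Treasury liabilities, $ billions."""
    reserves: float
    short_debt: float   # T-bills and other short-maturity Treasuries
    long_debt: float    # long-maturity Treasuries (or MBS, for QE1-style buys)


def operation_twist(h, amount):
    # The Fed sells short debt to the public and buys long debt from it.
    return replace(h, short_debt=h.short_debt + amount,
                   long_debt=h.long_debt - amount)


def quantitative_easing(h, amount):
    # The Fed creates reserves and uses them to buy long debt from the public.
    return replace(h, reserves=h.reserves + amount,
                   long_debt=h.long_debt - amount)


def maturity_mix(h):
    """Lump reserves and short debt into one 'short, safe' category,
    which is the premise when the two are near-perfect substitutes."""
    return {"short": h.reserves + h.short_debt, "long": h.long_debt}


start = PublicHoldings(reserves=1500.0, short_debt=2000.0, long_debt=6000.0)
after_twist = operation_twist(start, 100.0)
after_qe = quantitative_easing(start, 100.0)

print(maturity_mix(after_twist))  # {'short': 3600.0, 'long': 5900.0}
print(maturity_mix(after_qe))     # {'short': 3600.0, 'long': 5900.0}
```

The two operations leave different line items on the public's books but the same short/long mix. If reserves and T-bills were not close substitutes, the lumping in maturity_mix would be wrong, and that is exactly the condition the argument turns on.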

But maybe the Fed thinks it was too hasty and should do more? Maybe that's what John Williams is talking about when he says there should be an "open-ended programme of QE."

Indeed, it's always puzzled me that the FOMC has not conducted its asset purchase programs in the way it formerly targeted the fed funds rate. Why not fix the path for purchases until the next FOMC meeting, and revisit the issue at each subsequent meeting?

Here's another puzzler. The article I link to above, on John Williams, says:

If the Fed launched another round of quantitative easing, Mr Williams suggested that buying mortgage-backed securities rather than Treasuries would have a stronger effect on financial conditions. “There’s a lot more you can buy without interfering with market function and you maybe get a little more bang for the buck,” he said.

There are two claims in the quote that are worthy of note:

1. Large-scale purchases of Treasuries by the Fed could be disruptive, and large scale purchases of mortgage-backed securities are not.

2. There is a larger effect (on something) of a given purchase of assets by the Fed if the assets are mortgage-backed securities than if the assets are Treasuries.

On the first claim: I ran across a discussion of "disruption" in the minutes from the last FOMC meeting:

Some members noted the risk that continued purchases of longer-term Treasury securities could, at some point, lead to deterioration in the functioning of the Treasury securities market that could undermine the intended effects of the policy. However, members generally agreed that such risks seemed low at present, and were outweighed by the expected benefits of the action.

So what's that about? I'm not completely sure, but I think the issue is the following. People who trade in government securities seem to have a segmented view of the market for Treasuries. "On-the-run" government securities are newly issued, and "off-the-run" are secondhand. Apparently the prices of on-the-run Treasuries tend to be higher than the prices of off-the-run Treasuries with the same duration. This difference in price is thought to be a liquidity premium, in that the market in on-the-run Treasuries is more liquid because it is thicker. To people who study market microstructure, this might make sense, but I think economists will have trouble explaining it. Why would we think of the market for Treasuries as segmented in this fashion? A secondhand Treasury is harder to price or trade than a new one? Why?
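To put a number on what such a liquidity premium means, the sketch below prices two bonds with identical cash flows, a hypothetical 10-year note with a 2 percent annual coupon, at two hypothetical yields 10 basis points apart. The higher-priced bond (standing in for the on-the-run issue) is the one with the lower yield, so the premium can be quoted either as a price difference or as a yield spread. Annual compounding and the specific yields are simplifying assumptions, not market data.

```python
def bond_price(face, coupon_rate, years, yield_rate):
    """Price of an annual-coupon bond discounted at a flat annual yield."""
    coupon = face * coupon_rate
    pv_coupons = sum(coupon / (1 + yield_rate) ** t for t in range(1, years + 1))
    pv_face = face / (1 + yield_rate) ** years
    return pv_coupons + pv_face


FACE, COUPON, YEARS = 100.0, 0.02, 10
on_the_run = bond_price(FACE, COUPON, YEARS, yield_rate=0.0150)   # hypothetical
off_the_run = bond_price(FACE, COUPON, YEARS, yield_rate=0.0160)  # hypothetical

premium = on_the_run - off_the_run            # price premium per 100 of face
spread_bp = (0.0160 - 0.0150) * 10_000        # same premium as a yield spread
print(f"price premium: {premium:.3f} per 100 face; yield spread: {spread_bp:.0f} bp")
```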

In any case, the Fed appears to do its Treasury purchases in the on-the-run market. Suppose that greater Fed activity in the market for on-the-run Treasuries makes these securities hard for private-sector purchasers to acquire. Why is that a problem? Isn't that the whole idea?

On the second claim above, that purchases of mortgage-backed securities (MBS) by the Fed should matter more than purchases of Treasuries, I don't see it. According to the theory the Fed seems to have in mind (to the extent it has anything on its collective mind at all) QE works because of market segmentation. You buy long-term assets and sell short-term ones, and this pushes up the price of the long-term assets, according to Ben Bernanke. But why should it make any difference whether the Fed purchases MBS or Treasuries? That would appear to be the same segment of the market. The MBS market is dominated by Freddie Mac and Fannie Mae, now government-owned enterprises. The MBS they issue are generic products, and one does not have to think much about what one is buying, just as one does not have to think much about what one is buying in the Treasury market. That's why the Fed is buying MBS and not corporate bonds. It doesn't require much thinking. More bang for the buck with MBS? I don't think so.

In my quest for actual research on why QE might matter, I ran across this paper by Mark Gertler and Peter Karadi. Here's something I like in the paper:

A popular view of LSAPs — known more broadly as "quantitative easing" — is that they reflect money creation. We instead argue that LSAPs should be seen as central bank intermediation. Just like private intermediaries the Fed has financed its asset purchases with variable interest bearing liabilities and not money per se. The difference of course is that the Fed’s liabilities are effectively government debt.

The important idea here is that it is useful to think about QE as intermediation. That's the whole key to understanding why it does or does not matter. Though Gertler and Karadi want to make a distinction between QE and conventional monetary policy or "money creation," they should think of the latter as intermediation too. The key issue is that the intermediation the Fed does will only matter and be useful to the extent that the Fed can do it better than the private sector can.

Gertler and Karadi do their best to construct a model where that is the case. There are financial frictions that gum up the private sector's ability to do something like QE. When the Fed does QE, that can be beneficial because the Fed is better at this activity than is the private sector, in the Gertler-Karadi model. It's a bit of a chicken model, and I'm not convinced, but basically they have the right idea. If you want to explain why QE matters, you have to show why the Fed is better than the private sector at turning long-term safe debt into short-term safe debt.

Wednesday, July 18, 2012

What I've been watching

I am a latecomer to this, but a friend suggested to me that I would enjoy the TV show Breaking Bad, and boy was he right. It has occupied me almost every evening for the past month, as I have caught up to the current season five.

The show tells the story of a particularly destructive mid-life crisis, as a mild-mannered high school teacher slowly descends into the underworld of drug manufacturing. It is not the best TV drama of all time (I would probably vote for The Wire), but it is close. It is important to watch in sequence, so be sure to start with season one.

Tuesday, July 17, 2012

Harry Reid's Wardrobe

Having learned that the U.S. Olympic uniforms were manufactured in China, Senator Harry Reid said, "I think they should take all of the uniforms put them in a big pile and burn them and start all over again."

Will some enterprising reporter please ask Senator Reid for the opportunity to inspect the senator's closet and check the labels of his clothing to make sure they are all American-made? I look forward to seeing Mr. Reid's bonfire.

In the alternative, I would be happy to send the senator a copy of my favorite textbook. He should pay particular attention to Chapters 3 and 9.

Saturday, July 14, 2012

The Progressivity of Taxes and Transfers

To update one of the tables for the next edition of my favorite textbook, I have been looking at the new CBO report on the distribution of income and taxes. I found the following calculations, based on the numbers in the CBO's Table 7, illuminating.

Because transfer payments are, in effect, the opposite of taxes, it makes sense to look not just at taxes paid, but at taxes paid minus transfers received. For 2009, the most recent year available, here are taxes less transfers as a percentage of market income (income that households earned from their work and savings):

Bottom quintile: -301 percent

Second quintile: -42 percent

Middle quintile: -5 percent

Fourth quintile: 10 percent

Highest quintile: 22 percent

Top one percent: 28 percent

The negative 301 percent means that a typical family in the bottom quintile receives about $3 in transfer payments, net of the taxes it pays, for every dollar of market income it earns.
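The measure is simple arithmetic: taxes paid minus transfers received, divided by market income. A minimal sketch follows; the household figures in it are hypothetical, chosen only to reproduce a -301 percent rate, and are not taken from the CBO tables.

```python
def net_tax_rate(taxes, transfers, market_income):
    """(Taxes paid - transfers received) as a fraction of market income."""
    if market_income <= 0:
        raise ValueError("market income must be positive")
    return (taxes - transfers) / market_income


# Hypothetical household: $10,000 of market income, $500 in taxes,
# $30,600 in transfers.
rate = net_tax_rate(taxes=500, transfers=30_600, market_income=10_000)
print(f"{rate:.0%}")                                    # -301%
print(f"net transfers per dollar earned: {-rate:.2f}")  # 3.01
```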

The most surprising fact to me was that the effective tax rate is negative for the middle quintile. According to the CBO data, this number was +14 percent in 1979 (when the data begin) and remained positive through 2007. It was negative 0.5 percent in 2008, and negative 5 percent in 2009. That is, the middle class, having long been a net contributor to the funding of government, is now a net recipient of government largess.

I recognize that part of this change is attributable to temporary measures to deal with the deep recession. But it is noteworthy nonetheless, as other deep recessions, such as that in 1982, did not produce a similar policy response.

Update: A reader points out the CBO's transfer data includes state and local transfers, but the tax data includes only federal taxes. If state and local taxes were included, or if state and local transfers were excluded, the middle quintile might well turn positive, though the CBO does not provide the data to establish that conclusion definitively.

Friday, July 13, 2012

Anti-poverty programs raise effective marginal tax rates

Eugene Steuerle calculates the effective marginal tax rates from the system of taxes and transfers:

we calculate the effective average marginal tax rate if this household increases its income from $10,000 to $40,000. That is, how much of the additional $30,000 of earnings is lost to government through direct taxes or loss of benefits? The average marginal tax rate in the first bar of Table 3, 29 percent, is based simply on federal and state direct taxes, including Social Security and the EITC. The rate rises appreciably as the family enrolls in additional transfer programs in bars 2 and 3. For a family enrolled in all the more universal non-wait-listed programs like SNAP, Medicaid, and SCHIP, the average effective marginal tax rate could be 55 percent. Enrolling the family in additional waitlisted programs, like housing assistance and TANF, ratchets the rate up above 80 percent....

Some caveats are in order. A number of eligible households do not apply for benefits, such as the food subsidies for which they are eligible. We have performed some analyses of the population as a whole at the Urban Institute and find that the average rates across households will be lower than what you see in the table because of less than full participation in the programs. By the same token, we have not included the child care grants in these calculations. Add those in, and the rate can exceed 100 percent.
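Steuerle's measure is (increase in direct taxes + benefits lost) divided by the increase in earnings. A minimal sketch follows. The tax and benefit amounts are hypothetical, picked so that the two results land near the 29 and 55 percent figures in the quote; they are not the Urban Institute's numbers.

```python
def effective_marginal_rate(earnings_low: float, earnings_high: float,
                            taxes_low: float, taxes_high: float,
                            benefits_low: float, benefits_high: float) -> float:
    """Share of additional earnings lost to higher net taxes or reduced benefits.

    Taxes are net of refundable credits such as the EITC, so they can be negative.
    """
    extra_earnings = earnings_high - earnings_low
    extra_taxes = taxes_high - taxes_low
    lost_benefits = benefits_low - benefits_high  # benefits phased out as income rises
    return (extra_taxes + lost_benefits) / extra_earnings


# Hypothetical family moving from $10,000 to $40,000 of earnings.
taxes_only = effective_marginal_rate(10_000, 40_000, -3_000, 5_700, 0, 0)
with_benefits = effective_marginal_rate(10_000, 40_000, -3_000, 5_700, 12_000, 4_200)
print(f"direct taxes only: {taxes_only:.0%}")
print(f"with benefit phase-outs: {with_benefits:.0%}")
```

Whenever added taxes plus lost benefits exceed the $30,000 of extra earnings, the function returns a value above 1. That is the over-100-percent case Steuerle mentions for child care grants.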

Thursday, July 12, 2012

HP Filters and Potential Output

The Hodrick-Prescott (HP) filter is used to detrend time series. It was introduced to economists in the work of Kydland and Prescott, became part of the toolbox of people working in the real business cycle literature, and spread from there. Hodrick and Prescott's paper circulated unpublished for a long time, but eventually appeared in the Journal of Money, Credit, and Banking.

In studying the cyclical behavior of economic time series, one has to take a stand on how to separate the cyclical component of the time series from the trend component. One approach is simply to fit a linear trend to the time series (typically in natural logs for prices and quantities). The problem with this is that there are typically medium-run changes in growth trends (e.g., real GDP grew at a relatively high rate in the 1960s, and at a relatively low rate from 2000 to 2012). If we are interested in variation in the time series only at business cycle frequencies, we should want to take out some of that medium-run variation. This requires that we somehow allow the growth trend to change over time. That's essentially what the HP filter does.

You can find a description of the HP filter on page 3 of Hodrick and Prescott's paper. The HP filter takes an economic time series y(t) and fits a trend g(t) to that raw time series by solving a minimization problem. The trend g(t) is chosen to minimize the sum of squared deviations of y(t) from g(t), plus the sum of squared second differences of g(t), weighted by a smoothing parameter L (the Greek letter lambda in the paper). The minimization problem penalizes changes in the growth rate of the trend, with the penalty increasing as L increases. The larger is L, the smoother will be the trend g(t).
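In symbols, one standard way to write this problem (using this post's L for the smoothing parameter and observations t = 1, ..., T; Hodrick and Prescott's own indexing may differ slightly) is

$$
\min_{g_1,\ldots,g_T}\; \sum_{t=1}^{T}\left(y_t-g_t\right)^2 \;+\; L\sum_{t=2}^{T-1}\left[\left(g_{t+1}-g_t\right)-\left(g_t-g_{t-1}\right)\right]^2 .
$$

Stacking the trend into a vector g and letting K be the (T-2)×T matrix whose rows take the second difference (1, -2, 1), setting the derivative of the objective to zero gives a linear system whose solution is the trend:

$$
\left(I + L\,K^{\top}K\right) g = y, \qquad c = y - g,
$$

where c is the cyclical component, i.e. the deviation from trend.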

Clearly, the choice of L is critical when one is using the HP filter. L=0 implies that g(t)=y(t), so there are no deviations from trend, and L=infinity gives a linear trend. Kydland and Prescott used L=1600 for quarterly data, and that stuck. It's obviously arbitrary, but it produces deviations from trend that don't violate the eyeball metric.
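To see how much L matters, here is a minimal, dependency-free Python sketch of the filter. It solves the first-order condition (I + L·K'K)g = y, where each row of K takes a second difference (1, -2, 1) of the trend. The names `hp_filter` and `roughness` and the sample series are illustrative assumptions, not the code used for the charts in this post; statistical packages such as statsmodels also ship an HP filter.

```python
import math


def hp_filter(y, lam=1600.0):
    """Hodrick-Prescott filter sketch: return (trend, cycle) with y = trend + cycle.

    Solves (I + lam * K'K) g = y, where each row of K applies the second
    difference (1, -2, 1) to the trend g. Pure Python; fine for a few hundred points.
    """
    n = len(y)
    # Build A = I + lam * K'K. It is symmetric, positive definite, and banded:
    # nonzero entries lie at most two places from the diagonal.
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = 1.0
    stencil = (1.0, -2.0, 1.0)
    for i in range(n - 2):  # one row of K per interior observation
        for j, kj in enumerate(stencil):
            for k, kk in enumerate(stencil):
                a[i + j][i + k] += lam * kj * kk
    b = [float(v) for v in y]
    # Gaussian elimination without pivoting (safe for a positive definite matrix).
    # Because of the band, each pivot only touches the next two rows.
    for p in range(n):
        for r in range(p + 1, min(p + 3, n)):
            factor = a[r][p] / a[p][p]
            for c in range(p, min(p + 3, n)):
                a[r][c] -= factor * a[p][c]
            b[r] -= factor * b[p]
    # Back substitution for the trend.
    trend = [0.0] * n
    for p in range(n - 1, -1, -1):
        s = b[p] - sum(a[p][c] * trend[c] for c in range(p + 1, min(p + 3, n)))
        trend[p] = s / a[p][p]
    cycle = [float(v) - g for v, g in zip(y, trend)]
    return trend, cycle


def roughness(g):
    """Sum of squared second differences of g: the term that L multiplies."""
    return sum((g[t + 1] - 2.0 * g[t] + g[t - 1]) ** 2 for t in range(1, len(g) - 1))


if __name__ == "__main__":
    # Hypothetical quarterly log series: 0.75% trend growth plus a slow wobble.
    y = [math.log(100.0) + 0.0075 * t + 0.02 * math.sin(t / 4.0) for t in range(120)]
    for lam in (0.0, 1600.0, 1e6):
        trend, cycle = hp_filter(y, lam)
        print(f"L={lam:>9.0f}  max |deviation| = {100 * max(map(abs, cycle)):.2f}%  "
              f"roughness of trend = {roughness(trend):.2e}")
```

Running it shows the two limits described above: with L=0 the trend is the data and every deviation is zero, while with a very large L the roughness of the trend is close to zero, so the trend is nearly a straight line.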

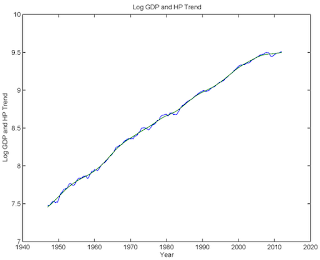

Here's what happens when you HP filter the quarterly real GDP data available on FRED. The first chart shows the log of real GDP and the HP trend. You can see in the chart how the growth trend changes over time to fit the time series.

The next chart shows the deviations of real GDP from the HP trend. This last picture doesn't grossly violate the eyeball metric, as it seems more or less consistent with what we think we know about business cycles. The large negative deviations from trend more or less match up with how the NBER defines the business cycle. Of course the NBER committee that dates business cycles is a group of human beings, and the HP filter is just a statistical technique, but it is reassuring if the two approaches are in the same ballpark.

Kydland and Prescott's approach to studying business cycles was to: (i) Define the raw time series that we are trying to explain as the deviations of actual time series from HP trends. (ii) Simulate a calibrated model on the computer to produce artificial time series. (iii) HP filter the artificial time series and ask whether these time series look much like the raw time series we are trying to explain.
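Step (iii) is often made concrete by comparing a few second moments of the filtered series, such as the volatility of output and the volatility and correlation of other variables relative to output. The sketch below assumes the cyclical components have already been computed by HP filtering both the data and the model's simulated series. The numbers are invented, and the particular statistics chosen are an illustrative assumption rather than a replication of Kydland and Prescott's tables.

```python
import math


def std(x):
    """Population standard deviation."""
    m = sum(x) / len(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / len(x))


def corr(x, y):
    """Correlation coefficient between two equal-length series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return cov / (std(x) * std(y))


def second_moments(output_cycle, other_cycle):
    """Summary statistics of two HP-filtered (cyclical) components."""
    return {
        "sd_output": std(output_cycle),
        "sd_other_relative": std(other_cycle) / std(output_cycle),
        "corr_with_output": corr(output_cycle, other_cycle),
    }


# Hypothetical cyclical components (percent deviations from HP trend).
data = second_moments([1.0, -0.5, -2.0, 0.5, 1.5, -0.5], [0.8, -0.3, -1.8, 0.2, 1.2, -0.1])
model = second_moments([0.7, -0.4, -1.5, 0.6, 1.0, -0.4], [0.3, -0.1, -0.6, 0.2, 0.4, -0.2])
for key in data:
    print(f"{key:>18}: data {data[key]:6.2f}   model {model[key]:6.2f}")
```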

Here's what Paul Krugman has to say about HP filters:

What is the economy's "underlying potential" anyway? It's the level of aggregate real GDP that we could achieve if, within the set of feasible economic policies, policymakers were to choose the policy that maximizes aggregate economic welfare. The HP trend is no more a measure of potential than is a linear trend fit to the data. The HP trend was arrived at through a purely statistical procedure. I did not use any economics to arrive at the two charts above - only a few lines of code. How then could the HP trend be a measure of potential GDP?

To measure potential GDP requires a model. The model will define for us what "feasible economic policies" and "aggregate economic welfare" are. If we used Kydland and Prescott's procedure, above, we might construct a model, calibrate and simulate it, and argue that the model produces time series that fit the actual data. We might then feel confident that we have a good model, and use that model to measure potential output. Maybe the model we fit to the data is a Keyesian model, which implies an active role for monetary and fiscal policy. Maybe it's a model with a well-articulated banking and financial sector, with an explicit role for monetary policy.

If the model is Kydland and Prescott's, there is a clear answer to what potential is - it's actual GDP (and certainly not the HP trend). That model doesn't have a government in it, and was not intended for thinking about policy. What Kydland and Prescott's work does for us, though, is to allow us to consider the possibility that, for some or all business cycle events, there may be nothing we can or should do about them.

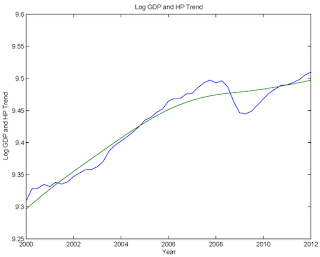

But how should we think about potential GDP in the current context? In the second chart above, one curious feature of the deviations from the HP trend is that the most recent recession (deviation from trend close to -4%) appears to be less severe than the 1981-82 recession (deviation from trend close to -5%). This may not be consistent with what we know about the last recession from looking at other data. The next chart shows the log of real GDP and the HP trend since 2000. In its attempt to fit the actual time series, the HP filter has done away with part of what we might want to think of as the recession, and real GDP in the first quarter of 2012 was more than 1% above trend.

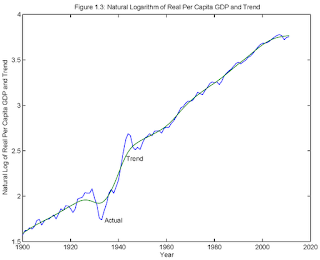

You can see we have to be careful with the HP filter, if we are looking at highly persistent events. You can see this even more clearly for the Great Depression. This next chart is from a revision I am doing for my textbook, showing annual real GDP per capita, with an HP trend (L=100) fit to the actual time series. You can see how the trend moves around substantially in response to the GDP data during the Great Depression and World War II - probably more than you might want it to. Of course that depends on how the smoothing parameter L is chosen. Look at the chart in Krugman's blog post. He's misusing the HP filter here in two ways (though of course what he wants to do is make people who use it look stupid). First, he's using a value for L that's just too small. Second, he's using it to fit a highly persistent event.

Back to recent events. What happens if we fit a linear trend to the post-1947 real GDP data? The next chart shows actual real GDP and the linear trend, post-2000. Here, real GDP falls below trend in early 2006, and is about 14% below trend in first quarter 2012. Remember that the HP trend is about 1% below actual GDP.

Do either of these trend measures (linear, HP) correspond to official measures of potential output? The next chart shows the Congressional Budget Office's measure of potential GDP, along with actual GDP, both in natural logs. According to the CBO measure, real GDP is currently below potential by 5.5% - somewhere between what the HP trend and linear trend give you. But does CBO potential output do any better than trend-fitting in capturing what we should be measuring? Absolutely not. The CBO does not have a fully-articulated macroeconomic model that captures the role of economic policy. At best, what they seem to be doing is estimating potential based on an aggregate production function and long-term trends in the labor and capital inputs, and in total factor productivity. What we need, in the current context, are measures of the inefficiencies caused by various frictions, and a representation of how fiscal and monetary policies may or may not be able to work against those frictions.

Here's another way of looking at the data. The next two charts show the paths of real GDP and employment (CPS data) during the 1981-82 recession and the 2008-09 recession. In each chart, I normalize the observation at the NBER peak to 100. What's interesting here (if you didn't already know) is the slow recovery in the last recession relative to the earlier one, and the decrease in employment in the recent recession. In the 1981-82 recession, employment falls by a small amount, then resumes robust growth after 6 quarters. In the recent recession, employment falls by much more, and is still in the toilet after 12 quarters.

Here is a set of explanations that I have heard for the recent behavior of economic time series:

(i) Wages and prices are sticky.

(ii) There is a debt overhang. Consumers accumulated a lot of debt post-2000, the recession has compromised their ability to service that debt, and they have reduced consumption expenditures substantially.

(iii) Consumers and firms are anticipating higher taxes in the future.

(iv) Sectoral reallocation has caused mismatch in the labor market.

(v) Capacity has been reduced by a loss of wealth, or perhaps more specifically, collateralizable wealth.

Sticky wages and prices: Come on. The recession began in fourth quarter 2007, according to the NBER. How can we be suffering the effects of stuck wages and prices in mid-2012? The 1981-82 recession occurred in the midst of a rapid disinflation, from close to 15% (CPI inflation) in early 1980 to 2.5%, post-recession. If there was a time when wage and price stickiness would matter, that would be it. But, as you can see from the last two charts, the 1981-82 recession was short compared to the recent one, with a robust recovery.

Debt Overhang: Again, think about the 1981-82 recession. The unanticipated disinflation would have made a lot of debts much higher in real terms than anticipated. If you weren't seeing the debt overhang effect in the 1981-82 recession, why are you seeing it now?

Higher future taxes: Look at Canada. There may be a Conservative government there, but they seem committed to social insurance and relatively high taxes. And Canada is gaining ground on the US in the GDP per capita competition.

Sectoral Reallocation: This paper by Sahin and coauthors measures mismatch unemployment. They find it's significant, though it's not explaining all of the increased unemployment in the US (maybe a third).

Capacity from wealth (collateral): I like this one. Jim Bullard talked about this, though I think the correct story has to do with collateral specifically, rather than wealth. For example, the value of the housing stock is important, as it not only supports mortgage lending, but a stock of mortgage-backed securities that are (or were) widely-used as collateral in financial markets. One dollar in real estate value could support a multiple of that in terms of credit contracts in various markets.

Thus, the factors that I think potentially can give us the most mileage in explaining what is going on are ones which are not well-researched. We know a little bit about the mismatch problem, but have really only scratched the surface. What makes credit markets dysfunctional is still not well-understood. We have plenty of different models, and a lot to sort out.

Here's a summary of an interview with Jeff Lacker, President of the Richmond Fed. Jeff says:

The Fed should feel free to interpret the Humphrey Hawkins Act in whatever way makes the most economic sense. If I define potential GDP as I did above, then maximum employment is whatever level of employment can be achieved if optimal economic policies are pursued, within feasibility constraints. From the point of view of the central bank, there is nothing to be done. Even if you thought that all the factors (i)-(v) above are important, fiscal policy is a constraint for the central bank, and there is nothing to be done on the monetary policy front, for reasons discussed here. Whether real GDP is above or below some trend measure, or above or below CBO potential output is currently irrelevant to how the Fed should think about "maximum employment." We're there.

In studying the cyclical behavior of economic time series, one has to take a stand on how to separate the cyclical component of the time series from the trend component. One approach is to simply fit a linear trend to the time series (typically in natural logs for prices and quantities). The problem with this is that there are typically medium-run changes in growth trends (e.g. real GDP grew at a relatively high rate in the 1960s, and at a relatively low rate from 2000-2012). If we are interested in variation in the time series only at business cycle frequencies, we should want to take out some of that medium-run variation. This requires that we somehow allow the growth trend to change over time. That's essentially what the HP filter does.

You can find a description of the HP filter on page 3 of Hodrick and Prescott's paper. The HP filter takes an economic time series y(t), and fits a trend g(t) to that raw time series, by solving a minimization problem. The trend g(t) is chosen to minimize the sum of squared deviations of y(t) from g(t), plus the sum of squared second differences, weighted by a smoothing parameter L (the greek letter lambda in the paper). The minimization problem penalizes changes in the growth trend, with the penalty increasing as L increases. The larger is L, the smoother will be the trend g(t).

Clearly, the choice of L is critical when one is using the HP filter. L=0 implies that that g(t)=y(t) and there are no deviations from trend, and L=infinity gives a linear trend. Kydland and Prescott used L=1600 for quarterly data, and that stuck. It's obviously arbitrary, but it produces deviations from trend that don't violate the eyeball metric.

Here's what happens when you HP filter the quarterly real GDP data available on FRED. The first chart shows the log of real GDP and the HP trend. You can see in the chart how the growth trend changes over time to fit the time series.

The next chart shows the deviations of real GDP from the HP trend. This last picture doesn't grossly violate the eyeball metric, as it seems more or less consistent with what we think we know about business cycles. The large negative deviations from trend more or less match up with how the NBER defines the business cycle. Of course the NBER committee that dates business cycles is a group of human beings, and the HP filter is just a statistical technique, but it is reassuring if the two approaches are in the same ballpark.

Kydland and Prescott's approach to studying business cycles was to: (i) Define the raw time series that we are trying to explain as the deviations of actual time series from HP trends. (ii) Simulate a calibrated model on the computer to produce artificial time series. (iii) HP filter the artificial time series and ask whether these time series look much like the raw time series we are trying to explain.

Here's what Paul Krugman has to say about HP filters:

When applied to business cycles, the HP filter finds a smoothed measure of real GDP, which is then taken to represent the economy’s underlying potential, with deviations from this smoothed measure representing unsustainable temporary deviations from potential.

I'm not sure whether this is just Krugman's misunderstanding or whether it is widespread. In any case, it seems important to correct it.

What is the economy's "underlying potential" anyway? It's the level of aggregate real GDP that we could achieve if, within the set of feasible economic policies, policymakers were to choose the policy that maximizes aggregate economic welfare. The HP trend is no more a measure of potential than is a linear trend fit to the data. The HP trend was arrived at through a purely statistical procedure. I did not use any economics to arrive at the two charts above - only a few lines of code. How then could the HP trend be a measure of potential GDP?

To measure potential GDP requires a model. The model will define for us what "feasible economic policies" and "aggregate economic welfare" are. If we used Kydland and Prescott's procedure, above, we might construct a model, calibrate and simulate it, and argue that the model produces time series that fit the actual data. We might then feel confident that we have a good model, and use that model to measure potential output. Maybe the model we fit to the data is a Keynesian model, which implies an active role for monetary and fiscal policy. Maybe it's a model with a well-articulated banking and financial sector, with an explicit role for monetary policy.

If the model is Kydland and Prescott's, there is a clear answer to what potential is - it's actual GDP (and certainly not the HP trend). That model doesn't have a government in it, and was not intended for thinking about policy. What Kydland and Prescott's work does for us, though, is to allow us to consider the possibility that, for some or all business cycle events, there may be nothing we can or should do about them.

But how should we think about potential GDP in the current context? In the second chart above, one curious feature of the deviations from the HP trend is that the most recent recession (deviation from trend close to -4%) appears to be less severe than the 1981-82 recession (deviation from trend close to -5%). This may not be consistent with what we know about the last recession from looking at other data. The next chart shows the log of real GDP and the HP trend since 2000. In its attempt to fit the actual time series, the HP filter has done away with part of what we might want to think of as the recession, and real GDP in the first quarter of 2012 was more than 1% above trend.

You can see we have to be careful with the HP filter, if we are looking at highly persistent events. You can see this even more clearly for the Great Depression. This next chart is from a revision I am doing for my textbook, showing annual real GDP per capita, with an HP trend (L=100) fit to the actual time series. You can see how the trend moves around substantially in response to the GDP data during the Great Depression and World War II - probably more than you might want it to. Of course that depends on how the smoothing parameter L is chosen. Look at the chart in Krugman's blog post. He's misusing the HP filter here in two ways (though of course what he wants to do is make people who use it look stupid). First, he's using a value for L that's just too small. Second, he's using it to fit a highly persistent event.
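The problem with persistent events shows up in a stylized experiment: take a series that grows steadily and then suffers a permanent drop in its level, and HP filter it with L=1600. Because the filter is two-sided (it uses observations both before and after each date), the trend starts falling before the drop and, within a few years, has largely caught up with the new lower level, so much of a permanent loss gets counted as a change in trend rather than as a deviation from it. The series and the function name `hp_trend` are hypothetical; the filter is the standard linear-system solution (I + L·K'K)g = y.

```python
def hp_trend(y, lam):
    """HP trend: solve (I + lam * K'K) g = y by banded Gaussian elimination."""
    n = len(y)
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    stencil = (1.0, -2.0, 1.0)  # one row of the second-difference matrix K
    for i in range(n - 2):
        for j, kj in enumerate(stencil):
            for k, kk in enumerate(stencil):
                a[i + j][i + k] += lam * kj * kk
    b = [float(v) for v in y]
    for p in range(n):  # forward elimination within the band
        for r in range(p + 1, min(p + 3, n)):
            factor = a[r][p] / a[p][p]
            for c in range(p, min(p + 3, n)):
                a[r][c] -= factor * a[p][c]
            b[r] -= factor * b[p]
    g = [0.0] * n
    for p in range(n - 1, -1, -1):  # back substitution
        g[p] = (b[p] - sum(a[p][c] * g[c] for c in range(p + 1, min(p + 3, n)))) / a[p][p]
    return g


# Hypothetical log output: 0.5% growth per quarter, then a permanent drop of
# 0.10 in logs (roughly 10%) at quarter 40 that is never reversed.
T, DROP_AT = 80, 40
y = [0.005 * t - (0.10 if t >= DROP_AT else 0.0) for t in range(T)]
trend = hp_trend(y, 1600.0)
cycle = [v - g for v, g in zip(y, trend)]
for t in (DROP_AT - 4, DROP_AT - 1, DROP_AT, DROP_AT + 4, DROP_AT + 12, T - 1):
    print(f"quarter {t:2d}: deviation from HP trend = {100 * cycle[t]:+.1f}%")
```

Output looks "above trend" just before the drop, only part of the drop registers as a negative deviation, and by the end of the sample the deviation has essentially vanished even though the loss is permanent.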

Back to recent events. What happens if we fit a linear trend to the post-1947 real GDP data? The next chart shows actual real GDP and the linear trend, post-2000. Here, real GDP falls below trend in early 2006, and is about 14% below trend in first quarter 2012. Remember that the HP trend is about 1% below actual GDP.
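Fitting the linear trend is an ordinary least-squares regression of log real GDP on a constant and a time index, and the gap is then a difference in logs. A minimal sketch with made-up data follows; the function names are illustrative. The same gap arithmetic applies when the reference is the HP trend or CBO potential, since those are also compared in natural logs. One caveat: if the 14% figure is a difference in logs, the exact percentage shortfall is about 13 percent, so for gaps this large the log approximation is loose.

```python
import math


def linear_trend(log_y):
    """Ordinary least squares fit of log_y[t] = a + b*t; returns fitted values."""
    n = len(log_y)
    t_bar = (n - 1) / 2.0
    y_bar = sum(log_y) / n
    sxy = sum((t - t_bar) * (v - y_bar) for t, v in enumerate(log_y))
    sxx = sum((t - t_bar) ** 2 for t in range(n))
    slope = sxy / sxx
    intercept = y_bar - slope * t_bar
    return [intercept + slope * t for t in range(n)]


def gap_percent(log_actual, log_reference):
    """Gap in log points times 100; close to a percentage gap when the gap is small."""
    return 100.0 * (log_actual - log_reference)


# Hypothetical quarterly log real GDP: steady 0.8% growth, then a level
# shortfall of 0.10 in logs over the last 16 quarters.
log_gdp = [0.008 * t - (0.10 if t >= 64 else 0.0) for t in range(80)]
trend = linear_trend(log_gdp)
print(f"log-point gap in last quarter: {gap_percent(log_gdp[-1], trend[-1]):.1f}")
print(f"exact percent gap:             {100 * (math.exp(log_gdp[-1] - trend[-1]) - 1):.1f}")
```

Because the shortfall is included in the regression sample, it also pulls the fitted trend down, which is one more reason the choice of trend matters for the size of the measured gap.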

Does either of these trend measures (linear, HP) correspond to official measures of potential output? The next chart shows the Congressional Budget Office's measure of potential GDP, along with actual GDP, both in natural logs. According to the CBO measure, real GDP is currently below potential by 5.5% - somewhere between what the HP trend and linear trend give you. But does CBO potential output do any better than trend-fitting in capturing what we should be measuring? Absolutely not. The CBO does not have a fully-articulated macroeconomic model that captures the role of economic policy. At best, what they seem to be doing is estimating potential based on an aggregate production function and long-term trends in the labor and capital inputs, and in total factor productivity. What we need, in the current context, are measures of the inefficiencies caused by various frictions, and a representation of how fiscal and monetary policies may or may not be able to work against those frictions.

Here's another way of looking at the data. The next two charts show the paths of real GDP and employment (CPS data) during the 1981-82 recession and the 2008-09 recession. In each chart, I normalize the observation at the NBER peak to 100. What's interesting here (if you didn't already know) is the slow recovery in the last recession relative to the earlier one, and the decrease in employment in the recent recession. In the 1981-82 recession, employment falls by a small amount, then resumes robust growth after 6 quarters. In the recent recession, employment falls by much more, and is still in the toilet after 12 quarters.
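Normalizing each episode so that the NBER peak equals 100 puts recessions of different sizes and eras on a common scale: each value becomes 100 times its ratio to the peak-quarter level. A minimal sketch; the function name and the employment numbers are hypothetical, not the CPS series in the charts.

```python
def index_to_peak(series, peak_index, quarters_after):
    """Return the window from the peak through `quarters_after` quarters later,
    rescaled so that the peak observation equals 100."""
    base = series[peak_index]
    window = series[peak_index: peak_index + quarters_after + 1]
    return [100.0 * x / base for x in window]


# Hypothetical employment levels (millions); the peak quarter is index 2.
employment = [99.0, 100.5, 101.0, 100.2, 98.9, 98.0, 98.3, 99.1]
path = index_to_peak(employment, peak_index=2, quarters_after=5)
for q, v in enumerate(path):
    print(f"{q} quarters after peak: {v:6.2f}")
```

Running the same function on each recession, with its own peak index, gives paths that can be plotted on one chart against "quarters after peak".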

Here is a set of explanations that I have heard for the recent behavior of economic time series:

(i) Wages and prices are sticky.

(ii) There is a debt overhang. Consumers accumulated a lot of debt post-2000, the recession has compromised their ability to service that debt, and they have reduced consumption expenditures substantially.

(iii) Consumers and firms are anticipating higher taxes in the future.

(iv) Sectoral reallocation has caused mismatch in the labor market.

(v) Capacity has been reduced by a loss of wealth, or perhaps more specifically, collateralizable wealth.

Sticky wages and prices: Come on. The recession began in fourth quarter 2007, according to the NBER. How can we be suffering the effects of stuck wages and prices in mid-2012? The 1981-82 recession occurred in the midst of a rapid disinflation, from close to 15% (CPI inflation) in early 1980 to 2.5%, post-recession. If there was a time when wage and price stickiness would matter, that would be it. But, as you can see from the last two charts, the 1981-82 recession was short compared to the recent one, with a robust recovery.

Debt Overhang: Again, think about the 1981-82 recession. The unanticipated disinflation would have made a lot of debts much higher in real terms than anticipated. If you weren't seeing the debt overhang effect in the 1981-82 recession, why are you seeing it now?
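The arithmetic behind this point: a borrower who took on nominal debt expecting high inflation finds its real burden higher when inflation turns out lower. The sketch below computes the ratio of the realized real value of a fixed nominal payment to the real value the borrower expected. The inflation rates and horizon are hypothetical, loosely in the range of the disinflation described above, not estimates.

```python
def real_burden_ratio(expected_inflation: float, realized_inflation: float, years: int) -> float:
    """Realized real value of a fixed nominal payment due in `years`, relative to
    the real value the borrower expected when the debt was contracted."""
    return ((1.0 + expected_inflation) / (1.0 + realized_inflation)) ** years


# Hypothetical: the borrower expected 10% annual inflation but got 4%, over five years.
ratio = real_burden_ratio(0.10, 0.04, 5)
print(f"real burden is {100 * (ratio - 1):.0f}% higher than expected")
```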

Higher future taxes: Look at Canada. There may be a Conservative government there, but they seem committed to social insurance and relatively high taxes. And Canada is gaining ground on the US in the GDP per capita competition.

Sectoral Reallocation: This paper by Sahin and coauthors measures mismatch unemployment. They find that it is significant, though it does not explain all of the increase in US unemployment (maybe a third of it).

Capacity from wealth (collateral): I like this one. Jim Bullard talked about this, though I think the correct story has to do with collateral specifically, rather than wealth. For example, the value of the housing stock is important, as it supports not only mortgage lending, but also a stock of mortgage-backed securities that are (or were) widely used as collateral in financial markets. One dollar in real estate value could support a multiple of that in terms of credit contracts in various markets.

Thus, the factors that I think can potentially give us the most mileage in explaining what is going on are ones that are not well researched. We know a little bit about the mismatch problem, but have really only scratched the surface. What makes credit markets dysfunctional is still not well understood. We have plenty of different models, and a lot to sort out.

Here's a summary of an interview with Jeff Lacker, President of the Richmond Fed. Jeff says:

Given what’s happened to this economy, I think we’re pretty close to maximum employment right now.

The "dual mandate" the Fed operates under includes language to the effect that the Fed should try to achieve maximum employment. Lacker says we're there, and I'm inclined to agree with him.

The Fed should feel free to interpret the Humphrey-Hawkins Act in whatever way makes the most economic sense. If I define potential GDP as I did above, then maximum employment is whatever level of employment can be achieved if optimal economic policies are pursued, within feasibility constraints. From the point of view of the central bank, there is nothing to be done. Even if you thought that all the factors (i)-(v) above are important, fiscal policy is a constraint for the central bank, and there is nothing to be done on the monetary policy front, for reasons discussed here. Whether real GDP is above or below some trend measure, or above or below CBO potential output, is currently irrelevant to how the Fed should think about "maximum employment." We're there.

Wednesday, July 11, 2012

A Tribute to Anna Schwartz

Here are some words from David Romer, spoken at the beginning of the NBER Summer Institute, Monetary Economics program:

Before we begin, we have a sad milestone to note. Anna Schwartz, who was a towering figure not just in the Monetary Economics program of the NBER, but in the field of monetary economics, died last month.

The usual way to mourn someone’s passing is with a moment of silence. I think everyone who knew Anna even a little realizes that that would be absolutely the wrong way to remember her. So instead, let’s remember her this week by being loud, forceful, and argumentative, and by interrupting one another when we feel really strongly about something. To honor her, we also need to keep our discussions and debates focused on the substantive questions at hand and firmly grounded in the evidence. And we need to be flexible and open-minded, willing to cheerfully change our minds even if it’s about a position we’ve argued for tenaciously for decades – as Anna did on the question of whether targeting monetary aggregates is a good way to conduct monetary policy.

But to truly honor Anna, what you need to do is to go back to your university or wherever you work after the conference is over, and do work that’s so damn good that it changes the way we think about basic questions in macroeconomics, and that’s so damn careful and thorough that fifty years from now, it’s still the first place that people look when they want to learn about an issue that your work addresses.

And, you’ll keep doing that work for decades. To put Anna’s research longevity in perspective, if you’re currently finishing your second year in graduate school, you’re probably about the same age that Anna was when she published her first paper. To match Anna’s research longevity, you’ll need to stay actively involved in important research until about 2080.

With those lofty goals in mind, let’s turn to the conference program.

Tuesday, July 10, 2012

Saturday, July 7, 2012

Thursday, July 5, 2012

Tuesday, July 3, 2012

Reply to Sumner's Reply

Scott Sumner replies here to my last post. Some comments:

1. I've discussed at length why I think QE is irrelevant under the current circumstances, most recently here. If the Fed could tax reserves, that would certainly matter, as Sumner points out, but that's not permitted in the United States, and so is not a practical option. Sumner has no special claim to be rooted in the "real world" here. I'm looking at the same real world he is. However, I think I'm more willing to think about the available theory. Apparently he doesn't think that's useful. The key problem under the current circumstances is that you can't just announce an arbitrary NGDP target and hit it with wishful thinking. The Fed needs some tools, and in spite of what Ben Bernanke says, it doesn't have them.

2. Sumner is correct about the change in views of central banks that occurred post-1970s. However, Volcker needed courage to change course. He faced a lot of opposition from within the Fed and without. I'm more cynical about the way central banks adopted New Keynesian economics later on. Rightly or wrongly, this wasn't stuff that was challenging what they were doing - more like an exercise in reverse engineering. How do we write down a framework that justifies the status quo?

3. Sumner says:

...RGDP fluctuations at cyclical frequencies are assumed to be suboptimal, and are assumed to generate large welfare losses - particularly when generated by huge NGDP shocks, as in the early 1930s.

My point in looking at seasonally unadjusted nominal GDP was to show that fluctuations in nominal GDP can't be intrinsically bad. I think we all recognize that seasonal variation in NGDP is something that policy need not do anything to eliminate. So how do we know that we want to eliminate this variation at business cycle frequencies? In contrast to what Sumner states, it is widely recognized that some of the business cycle variability in RGDP we observe is in fact not suboptimal. Most of what we spend our time discussing (or fighting about) is the nature and quantitative significance of the suboptimalities. Sumner seems to think, like old-fashioned quantity theorists, that there is a sufficient statistic for suboptimality - in this case NGDP. I don't see it.

4. Friedman rule: My conjecture in favor of nominal interest rate smoothing reflects, I guess, a view that the intertemporal distortions that the Friedman rule addresses could be more important than the sticky price/wage distortions. Further, it's not clear that an optimal policy in a sticky price/wage model would imply a lot of variability in the nominal interest rate.

5. Sumner says:

I’m kind of perplexed as to why Williamson calls himself a “New Monetarist.”

Randy Wright talked me into it. It's as good a name as any. We like some things about Milton Friedman, but there are some things we don't like - his 100% reserve requirement, his need to separate assets into money and not-money. We're also a lot more interested in theory.

Pigou Club news

From my inbox:

I thought you might be amused to see this. At Metrovino, a restaurant in Portland, Oregon, where I run the bar, we needed a name for a drink we serve that's a slight variation on the Pegu Club (a classic gin cocktail). "Pigou Club" was the first thing to come to mind. I doubt many guests know what the name alludes to, but it makes me happy and the drink has become one of our bestsellers. A photo of the menu is attached. The recipe and background are here.

Also, by the way, Australia has joined the club, amidst significant controversy.

Monday, July 2, 2012

Some Doubts About NGDP Targeting

Nominal GDP (NGDP) targeting as a monetary policy rule was first proposed in the 1980s, the most prominent proponent being Bennett McCallum. The NGDP target was a direct descendant of the money growth target - Milton Friedman's proposal from 1968. Of course, money growth targeting was adopted by many central banks in the world in the 1970s and 1980s. It's rare to hear central bankers mention monetary aggregates in public these days. Why? Constant money growth rules failed miserably, as one central building block of the quantity theory approach - the stable money demand function - does not exist.

McCallum's reasoning (and here I may be taking some liberties - I'm working from memory) was basically the following. We all know that MV=PY. That's the equation of exchange - an identity. M is the nominal money stock, however measured, P is the price level, and Y is real GDP. Thus, PY is nominal income. V is the velocity of money, which is defined to be the ratio of nominal income to the money stock. That's what makes that equation an identity. A typical quantity theory approach to money demand (see, for example, some of Lucas's work on money demand) is to assume that the income elasticity of money demand is one, so from the equation of exchange, the theory of money demand reduces to a theory that explains V. Friedman would have liked V to be predictable. The problem is that it's not. Technology changes. Regulations change. Large unanticipated events happen in financial markets and payments systems. As a result, there is considerable unpredictable variation in V, at both low and high frequencies.
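To make the identity concrete, here is a minimal sketch using made-up numbers (the series are hypothetical, not data). Velocity is computed as nominal income divided by the money stock, so MV = PY holds by construction, and in log growth rates the growth of M plus the growth of V equals inflation plus real growth.

```python
import math

def velocity(nominal_income: float, money_stock: float) -> float:
    """Velocity defined as V = PY / M; this definition makes MV = PY an identity."""
    return nominal_income / money_stock

def log_growth(new: float, old: float) -> float:
    """Continuously compounded growth rate, in percent."""
    return 100.0 * math.log(new / old)

# Hypothetical values for two years (illustrative only, not actual data).
M = [1000.0, 1050.0]    # nominal money stock
P = [1.00, 1.02]        # price level
Y = [15000.0, 15300.0]  # real GDP

V = [velocity(p * y, m) for m, p, y in zip(M, P, Y)]

# Because MV = PY holds by construction, the growth rates add up:
# g_M + g_V = g_P + g_Y
lhs = log_growth(M[1], M[0]) + log_growth(V[1], V[0])
rhs = log_growth(P[1], P[0]) + log_growth(Y[1], Y[0])
print(V, lhs, rhs)
```

Nothing in this calculation explains V; it only measures it after the fact, which is why a theory of money demand has to supply the explanation.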

So, McCallum looked at the equation of exchange and thought: The central bank controls M, but if M is growing at a constant rate and V is highly variable, then PY is bouncing all over the place. Why not make PY grow at a constant rate, and have the central bank move M to absorb the fluctuations in V? As economists we can disagree about how growth in nominal income will be split between growth in P and growth in Y, depending in part on our views about the sources and extent of non-neutralities of money. But, McCallum reasoned, NGDP targeting seems agnostic. According to him, we don't really have to fuss with the complications of what the non-neutralities are, or whether non-monetary factors are to some extent driving business cycles.
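McCallum's argument can be illustrated with a toy simulation. This is a sketch under strong assumptions that are not from the original: log velocity follows a random walk, and under the targeting rule the central bank observes V in the same period and sets M = target / V. All parameter values are hypothetical.

```python
import math
import random

random.seed(0)
T = 40                 # number of quarters (hypothetical)
money_growth = 0.0125  # constant money growth per quarter (hypothetical)
ngdp_growth = 0.0125   # target NGDP growth per quarter (hypothetical)

# Assumed: log velocity is a random walk, so its shocks are unpredictable.
log_v = [0.0]
for _ in range(T - 1):
    log_v.append(log_v[-1] + random.gauss(0.0, 0.01))
V = [math.exp(x) for x in log_v]

# Rule 1: money grows at a constant rate; NGDP = M * V absorbs the V shocks.
M_const = [100.0 * math.exp(money_growth * t) for t in range(T)]
ngdp_const = [m * v for m, v in zip(M_const, V)]

# Rule 2: NGDP targeting; M moves to offset V, assuming V is observed in time.
target = [100.0 * math.exp(ngdp_growth * t) for t in range(T)]
M_target = [pt / v for pt, v in zip(target, V)]
ngdp_target = [m * v for m, v in zip(M_target, V)]

def max_gap_pct(actual: list[float], path: list[float]) -> float:
    """Largest absolute log deviation of NGDP from the target path, in percent."""
    return max(abs(100.0 * math.log(a / p)) for a, p in zip(actual, path))

print("constant money growth:", max_gap_pct(ngdp_const, target))
print("NGDP targeting:", max_gap_pct(ngdp_target, target))
```

Under constant money growth, the deviation of NGDP from the path is just the accumulated velocity shock; under the targeting rule it is zero by construction. That second result depends entirely on the assumption that the central bank can observe and offset V as it happens.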

Central bankers and macroeconomists did not pay much attention to NGDP targeting. To the extent that central banks adopted explicit policy rules, those were inflation targets, for example in New Zealand, Australia, Canada, the UK, and elsewhere. The reasoning behind this seemed to be that central banks get in trouble when they become overly focused on "real" goals, e.g. the level of real GDP, the unemployment rate, etc. In an overconfident attempt to "stimulate" the economy, the central bank may just stimulate inflation, and then have to backtrack. Bringing inflation down to a sustained low rate after it has been high for a long time produces a recession, as in the U.S. in 1981-82. But if the central bank commits to a low inflation rate forever, we can get the benefits of low inflation and less real instability to boot.

An early 1990s development was the Taylor rule, which became a component of New Keynesian models and crept into the language of central bankers. American central bankers find the Taylor rule particularly appealing. Their past behavior appears to fit the rule, so it does not dictate that they do anything different. Great! As well, the Taylor rule seems to conform to the intent of Congress's dual mandate.

The Taylor rule takes as given the operating procedure of the Fed, under which the FOMC determines a target for the overnight federal funds rate, and the job of the New York Fed people who manage the System Open Market Account (SOMA) is to hit that target. The Taylor rule, if the FOMC follows it, simply dictates how the fed funds rate target should be set every six weeks, given new information.
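For concreteness, here is a minimal sketch of the rule in the form John Taylor originally proposed in 1993: a 2% equilibrium real rate, a 2% inflation target, and coefficients of 0.5 on both the inflation gap and the output gap. The function name and the meeting-by-meeting readings are hypothetical, chosen only to show how the rule maps new information into a fed funds rate target.

```python
def taylor_rule(inflation: float, output_gap: float,
                r_star: float = 2.0, pi_star: float = 2.0,
                a_pi: float = 0.5, a_y: float = 0.5) -> float:
    """Fed funds rate target (percent) from a Taylor (1993)-style rule.

    i = r* + pi + a_pi * (pi - pi*) + a_y * (output gap)
    inflation and output_gap are in percent; defaults follow Taylor (1993).
    """
    return r_star + inflation + a_pi * (inflation - pi_star) + a_y * output_gap

# Hypothetical (inflation %, output gap %) readings at successive FOMC meetings.
readings = [(2.0, 0.0), (4.0, 1.0), (-1.0, -6.0)]
targets = [taylor_rule(pi, gap) for pi, gap in readings]
print(targets)  # -> [4.0, 7.5, -3.5]
```

With weak enough readings, as in the third case, the formula prescribes a negative rate, which the FOMC's fed funds target cannot follow.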

So, from the mid-1980s until 2008, everything seemed to be going swimmingly. Just as the inflation targeters envisioned, inflation was not only low, but we had a Great Moderation in the United States. Ben Bernanke, who had long been a supporter of inflation targeting, became Fed Chair in 2006, and I think it was widely anticipated that he would push for inflation targeting with the US Congress.

In 2008, of course, the ball game changed. If you thought economists and policymakers were in agreement about how the world works, or about what appropriate policy is, maybe you were surprised. One idea that has been pushed recently is a revived proposal for NGDP targeting. The economists pushing this are bloggers - Scott Sumner and David Beckworth, among others. Some influential people like the idea, including Paul Krugman, Brad DeLong, and Charles Evans.

In its current incarnation, here's how NGDP targeting would work, according to Scott Sumner. The Fed would set a target path for future NGDP. For example, the Fed could announce that NGDP will grow along a 5% growth path forever (say 2% for inflation and 3% for long run real GDP growth). Of course, the Fed cannot just wish for a 5% growth path in NGDP and have it happen. All the Fed can actually do is issue Fed liabilities in exchange for assets, set the interest rate on reserves, and lend at the discount window. One might imagine that Sumner would have the Fed conform to its existing operating procedure and move the fed funds rate target - Taylor rule fashion - in response to current information on where NGDP is relative to its target. Not so. Sumner's recommendation is that we create a market in claims contingent on future NGDP - an NGDP futures market - and that the Fed then conduct open market operations to achieve a target for the price of one of these claims.
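A target path is a level path, not just a growth rate, so a shortfall in one year leaves NGDP below the path until it is made up. The sketch below computes a 5% path and the log gap between (hypothetical) actual NGDP and that path; the index values and function names are illustrative assumptions.

```python
import math

def target_path(base: float, growth: float, periods: int) -> list[float]:
    """Level path growing at a constant annual rate: base * (1 + growth)**t."""
    return [base * (1.0 + growth) ** t for t in range(periods)]

def gap_pct(actual: float, target: float) -> float:
    """Log deviation of actual NGDP from the target path, in percent."""
    return 100.0 * math.log(actual / target)

# Note: 1.02 * 1.03 = 1.0506, so "2% inflation + 3% real growth" is
# approximately, not exactly, a 5% nominal path.
path = target_path(100.0, 0.05, 4)      # hypothetical NGDP index, 5% per year
actual = [100.0, 104.0, 108.0, 117.0]   # hypothetical NGDP outcomes
gaps = [gap_pct(a, p) for a, p in zip(actual, path)]
print([round(p, 4) for p in path])
print([round(g, 2) for g in gaps])
```

In the second and third years NGDP grows at roughly 4% per year and the gap widens, even though a growth-rate target alone would treat each year's miss as bygone.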

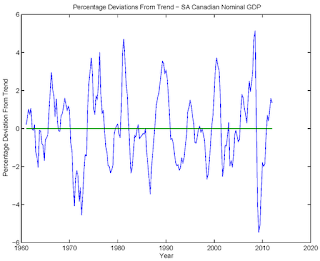

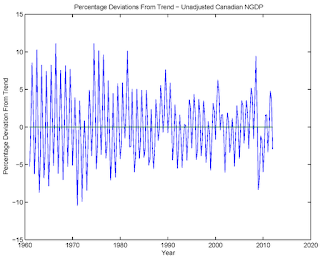

So, what do we make of this? If achieving an NGDP target is a good thing, then variability about trend in NGDP must be bad. So how have we been doing? The first chart shows HP-filtered (Hodrick-Prescott filtered) nominal and real GDP for the US. You're looking at percentage deviations from trend in the two time series. The variability of NGDP about trend has been substantial in the post-1947 period - basically on the order of variability about trend in real GDP. You'll note that the two HP-filtered time series in the chart follow each other closely. If we were to judge past monetary policy performance by variability in NGDP, that performance would appear to be poor. What does that tell you? It will be a cold day in hell when the Fed adopts NGDP targeting. Just as the Fed likes the Taylor rule, as it confirms the Fed's belief in the wisdom of its own actions, the Fed will not buy into a policy rule that makes its previous actions look stupid.
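Here is a minimal, dependency-free sketch of the Hodrick-Prescott filter used for charts like these. It solves the standard first-order condition (I + λD'D)τ = y, where D is the second-difference matrix, using dense Gaussian elimination. The smoothing parameter λ = 1600 is the conventional choice for quarterly data and is an assumption here; the original does not say which value was used. Feeding in 100 times the natural log of a series makes the cycle approximately a percentage deviation from trend, and the `std` and `corr` helpers measure how large and how closely related two such cycles are.

```python
import math

def hp_filter(y: list[float], lam: float = 1600.0) -> tuple[list[float], list[float]]:
    """Hodrick-Prescott filter: returns (trend, cycle), with cycle = y - trend.

    Solves (I + lam * D'D) trend = y, where D is the (n-2) x n matrix of
    second differences. Dense O(n^3) elimination: fine for a few hundred points.
    """
    n = len(y)
    if n < 3:
        raise ValueError("need at least 3 observations")
    # Build A = I + lam * D'D by adding the outer product of each row [1, -2, 1].
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        a[i][i] = 1.0
    coeffs = (1.0, -2.0, 1.0)
    for k in range(n - 2):
        for p in range(3):
            for q in range(3):
                a[k + p][k + q] += lam * coeffs[p] * coeffs[q]
    # Forward elimination with partial pivoting.
    b = list(y)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            if factor != 0.0:
                for c in range(col, n):
                    a[r][c] -= factor * a[col][c]
                b[r] -= factor * b[col]
    # Back substitution for the trend.
    trend = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = b[r] - sum(a[r][c] * trend[c] for c in range(r + 1, n))
        trend[r] = s / a[r][r]
    cycle = [yi - ti for yi, ti in zip(y, trend)]
    return trend, cycle

def std(xs: list[float]) -> float:
    """Population standard deviation."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))

def corr(xs: list[float], ys: list[float]) -> float:
    """Correlation coefficient between two equal-length series."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (yv - my) for x, yv in zip(xs, ys)) / len(xs)
    return cov / (std(xs) * std(ys))
```

For example, `_, c_nom = hp_filter([100 * math.log(v) for v in ngdp])` and the same for real GDP, followed by `std(c_nom)`, `std(c_real)` and `corr(c_nom, c_real)`, where `ngdp` is a hypothetical list of quarterly levels. For real work with long samples, a banded solver or an existing implementation such as `hpfilter` in statsmodels is faster than this dense version.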

There's another interesting feature of the first chart. Note that, during the 1970s, variability in NGDP about trend was considerably smaller than variability in real GDP. But after the 1981-82 recession and before the 2008-09 recession, detrended NGDP hugs detrended real GDP closely. Yet the first period is typically judged to be a period of bad monetary policy and the latter a period of good monetary policy.

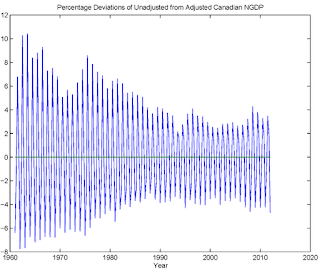

Here's another problem. There is a substantial source of variability in real and nominal GDP that we rarely think about, as we are always staring at seasonally adjusted data. Indeed, the Bureau of Economic Analysis makes it really hard to stare at unadjusted National Income Accounts data. I couldn't unearth it, and had to resort to Statistics Canada, which, in its well-ordered Canadian fashion, puts all of these numbers where you expect to find them. The next chart shows the natural logs of Canadian nominal GDP, seasonally adjusted and unadjusted. The seasonal variation in unadjusted NGDP is pretty clear in that picture, but to get an idea of the magnitude, the next two charts show HP-filtered seasonally adjusted and unadjusted Canadian NGDP, respectively.

The first chart looks roughly like what you would see for the same period in the US. Typical deviations from trend at business cycle frequencies range from 2% to 5%. In the unadjusted series, though, the deviations from trend are substantially larger - typically from 5% to 10%.

The second chart is interesting, as you can see both the seasonal variation and the cyclical variation in NGDP. If we want just the percentage deviations of unadjusted NGDP from seasonally adjusted NGDP, we get the next chart. There's a substantial amount of variation there - on the order of what we see at business cycle frequencies.
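The last calculation is simple: take 100 times the log ratio of unadjusted to seasonally adjusted NGDP in each quarter. A minimal sketch follows, with hypothetical quarterly levels rather than Statistics Canada data; before applying it to real series, check that both are in the same units (for example, both quarterly levels rather than one of them at annual rates).

```python
import math

def seasonal_deviation_pct(unadjusted: list[float], adjusted: list[float]) -> list[float]:
    """Percentage (log) deviation of unadjusted NGDP from seasonally adjusted NGDP."""
    return [100.0 * math.log(u / a) for u, a in zip(unadjusted, adjusted)]

# Hypothetical quarterly NGDP levels (illustrative only).
sa = [400.0, 404.0, 408.0, 412.0]   # seasonally adjusted
nsa = [380.0, 410.0, 420.0, 405.0]  # not seasonally adjusted
dev = seasonal_deviation_pct(nsa, sa)
print([round(d, 2) for d in dev])
```

Because the seasonally adjusted series already strips out the seasonal pattern, this deviation isolates the seasonal component (plus any irregular noise), with no trend filtering required.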